Publications

2025

AAM-SEALS: Developing Aerial-Aquatic Manipulators in SEa, Air, and Land Simulator ICRA-25 Workshop

NatSGLD: A Dataset with Speech, Gesture, Logic, and Demonstration for Robot Learning in Natural Human-Robot Interaction HRI-25

2024

"Task Success" is not Enough: Investigating the Use of Video-Language Models as Behavior Critics for Catching Undesirable Agent Behaviors COLM-24

Learning from Ambiguous Demonstrations with Self-Explanation Guided Reinforcement Learning AAAI-24

2022

Symbols as a Lingua Franca for Bridging Human-AI Chasm for Explainable and Advisable AI Systems AAAI-22

2021

Contrastively Learning Visual Attention as Affordance Cues from Demonstrations for Robotic Grasping IROS-21

2019

Explicability as Minimizing Distance from Expected Behavior AAMAS-19

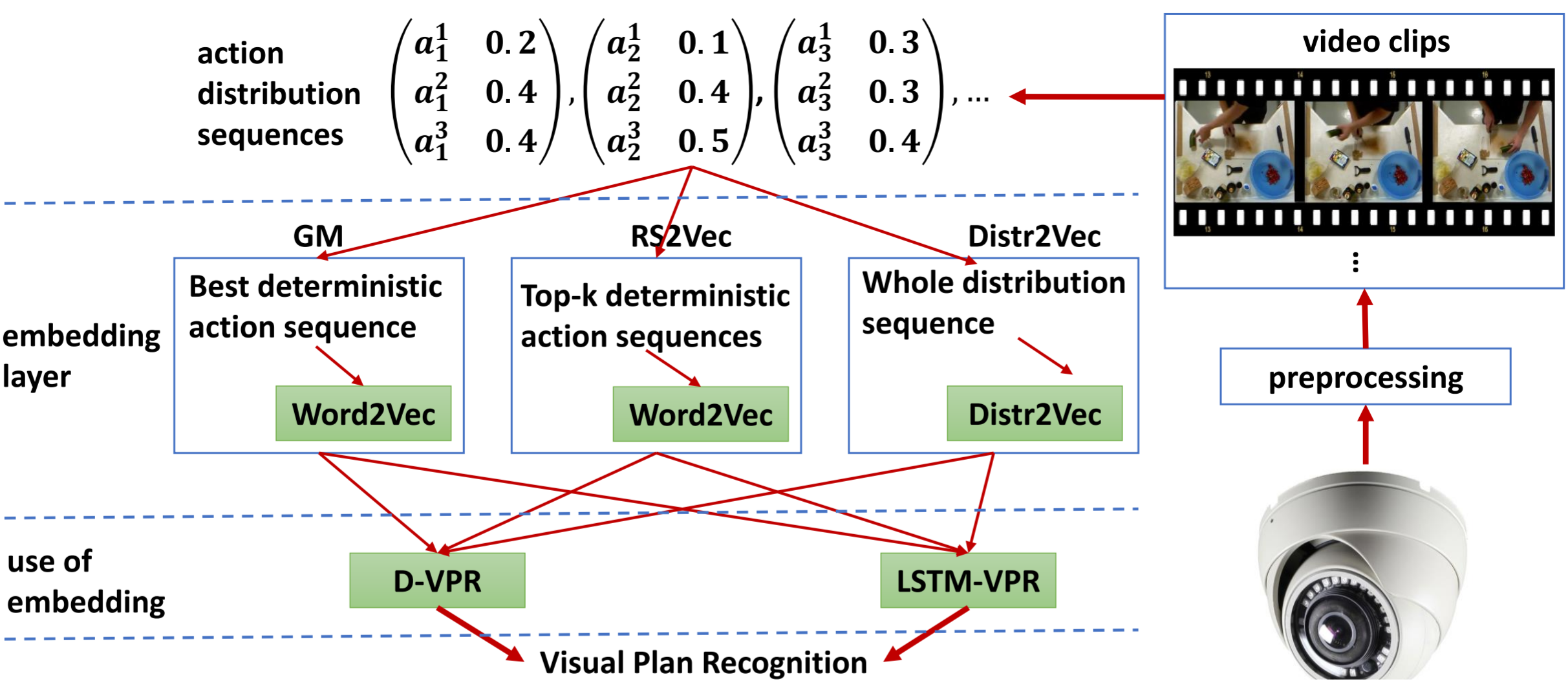

Discovering Underlying Plans Based on Shallow Models ACM-TIST-19

Plan-Recognition-Driven Attention Modeling for Visual Recognition AAAI-19 Workshop

2018